Companies found violating the rules could incur hefty fines of up to 10% of their global annual revenue.

The UK's significant Online Safety Act is now in effect, introducing stricter online content moderation and holding tech giants such as Meta-owned Facebook and Instagram, Google, TikTok, and other social media platforms accountable. The legislation grants Ofcom, the UK's media regulator, the authority to enforce tougher rules on how these companies manage harmful and illegal content on their platforms.

The Online Safety Act, which became law last year, is designed to create a safer online environment, especially for children. Ofcom has released its initial codes of practice, which outline the actions tech companies must take to tackle illegal activities such as terrorism, hate speech, fraud, and child sexual abuse.

Under this Act, tech companies are required to uphold a 'duty of care' to shield users from harmful content. They have until March 16, 2025, to evaluate the risks associated with illegal content on their platforms and to put in place measures to mitigate those risks.

This involves enhancing content moderation, streamlining reporting processes, and integrating safety features directly into platforms.

Ofcom Chief Executive Melanie Dawes said, “This marks a major advancement in online safety. We will be actively monitoring the industry to ensure adherence to these stringent safety standards.”

Companies found violating the rules could incur hefty fines of up to 10% of their global annual revenue. In cases of repeated or serious infractions, senior managers may face imprisonment, and Ofcom has the authority to seek court orders to block access to services that do not comply in the UK.

The new codes say that reporting and complaint mechanisms must be easily accessible, and they require high-risk platforms to implement hash-matching technology to detect and remove child sexual abuse material.

Meanwhile, Ofcom said additional regulations are likely to be announced in 2025, including measures to block accounts that share child sexual abuse material and the use of AI to tackle illegal content.

Technology Minister Peter Kyle said, "These codes connect the protections we have in the offline world with those online. Platforms must enhance their efforts, or Ofcom has my full backing to utilize its powers, including imposing fines and blocking websites."

Find your daily dose of All

Latest News including

Sports News,

Entertainment News,

Lifestyle News, explainers & more. Stay updated, Stay informed-

Follow DNA on WhatsApp. Cambodia, Thailand agree to ceasefire after Donald Trump's intervention, latter has one condition

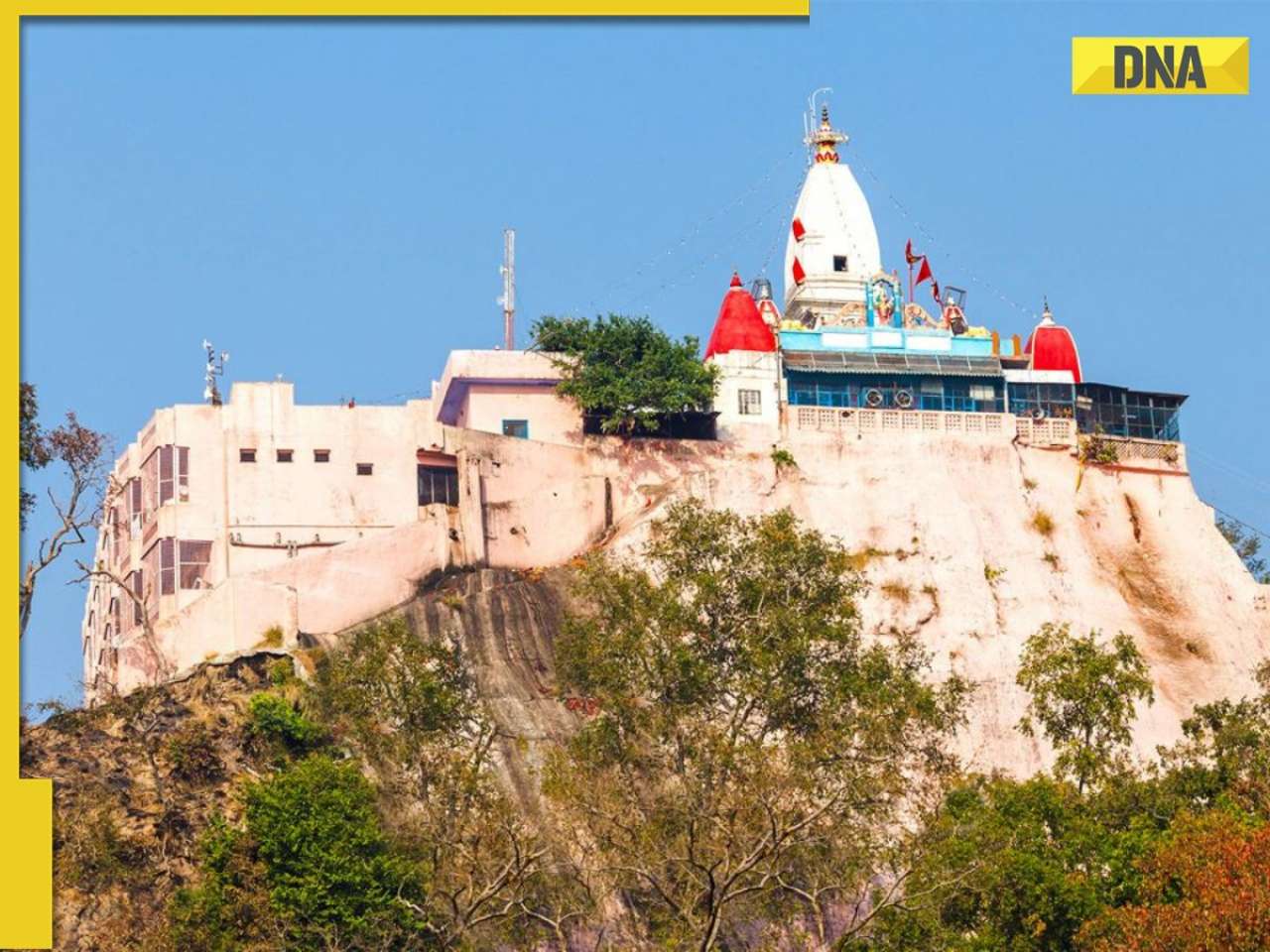

Cambodia, Thailand agree to ceasefire after Donald Trump's intervention, latter has one condition Stampede at Mansa Devi temple in Haridwar, 6 feared dead, several injured

Stampede at Mansa Devi temple in Haridwar, 6 feared dead, several injured Pakistan honours US General who praised it for...with prestigious Nishan-e-Imtiaz award, India opposed him for...

Pakistan honours US General who praised it for...with prestigious Nishan-e-Imtiaz award, India opposed him for... Japan’s fastest bullet train can cover Delhi to Varanasi in just 3.5 hours, check stoppages, route, will be operational from...

Japan’s fastest bullet train can cover Delhi to Varanasi in just 3.5 hours, check stoppages, route, will be operational from... Rishabh Pant to bat on day 5 of 4th Test vs England? Batting coach issues BIG update on India's vice captain's availability

Rishabh Pant to bat on day 5 of 4th Test vs England? Batting coach issues BIG update on India's vice captain's availability 7 stunning images of Galactic 'Fossil' captured by NASA

7 stunning images of Galactic 'Fossil' captured by NASA Other than heart attacks or BP : 7 hidden heart conditions triggered by oily foods

Other than heart attacks or BP : 7 hidden heart conditions triggered by oily foods 7 most captivating space images captured by NASA you need to see

7 most captivating space images captured by NASA you need to see AI-remagined famous Bollywood father-son duos will leave you in splits

AI-remagined famous Bollywood father-son duos will leave you in splits 7 superfoods that boost hair growth naturally

7 superfoods that boost hair growth naturally Tata Harrier EV Review | Most Advanced Electric SUV from Tata?

Tata Harrier EV Review | Most Advanced Electric SUV from Tata? Vida VX2 Plus Electric Scooter Review: Range, Power & Real-World Ride Tested!

Vida VX2 Plus Electric Scooter Review: Range, Power & Real-World Ride Tested! MG M9 Electric Review | Luxury EV with Jet-Style Rear Seats! Pros & Cons

MG M9 Electric Review | Luxury EV with Jet-Style Rear Seats! Pros & Cons Iphone Fold: Apple’s iPhone Fold Could Solve Samsung’s Biggest Foldable Problem | Samsung Z Fold 7

Iphone Fold: Apple’s iPhone Fold Could Solve Samsung’s Biggest Foldable Problem | Samsung Z Fold 7 Trump News: Congress Seeks Answers On Trump's Alleged Mediation In Operation Sindoor

Trump News: Congress Seeks Answers On Trump's Alleged Mediation In Operation Sindoor OpenAI CEO Sam Altman issues CHILLING warning, says conversations with ChatGPT are...

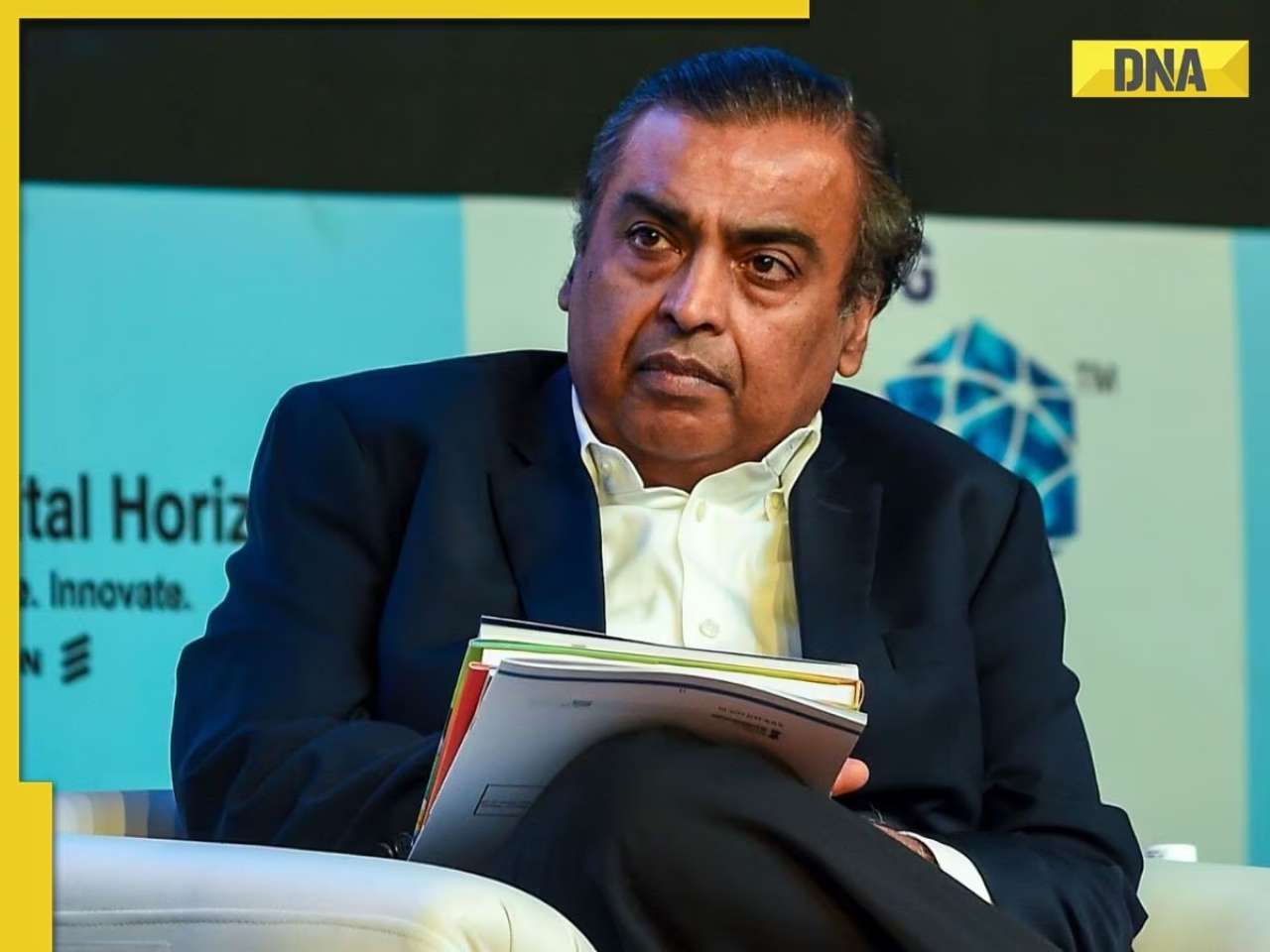

OpenAI CEO Sam Altman issues CHILLING warning, says conversations with ChatGPT are... This man becomes world's highest-earning billionaire in 2025, beats Elon Musk and Jeff Bezos, Mukesh Ambani is at...

This man becomes world's highest-earning billionaire in 2025, beats Elon Musk and Jeff Bezos, Mukesh Ambani is at... Meet man who built Rs 200,000,000 empire after two failed ventures, his business is..., net worth is Rs...

Meet man who built Rs 200,000,000 empire after two failed ventures, his business is..., net worth is Rs... Meet man, founder of app under govt lens, also owns Rs 1000000000 business, he is..., his educational qualification is...

Meet man, founder of app under govt lens, also owns Rs 1000000000 business, he is..., his educational qualification is... Jinnah wanted THIS Muslim man to be first Finance Minister of Pakistan, he refused, his son is on Forbes list of billionaires

Jinnah wanted THIS Muslim man to be first Finance Minister of Pakistan, he refused, his son is on Forbes list of billionaires Inside Ahaan Panday’s academic journey before his Bollywood debut in Saiyaara

Inside Ahaan Panday’s academic journey before his Bollywood debut in Saiyaara From Alia Bhatt to Anushka Sharma: 5 Bollywood moms who are redefining style

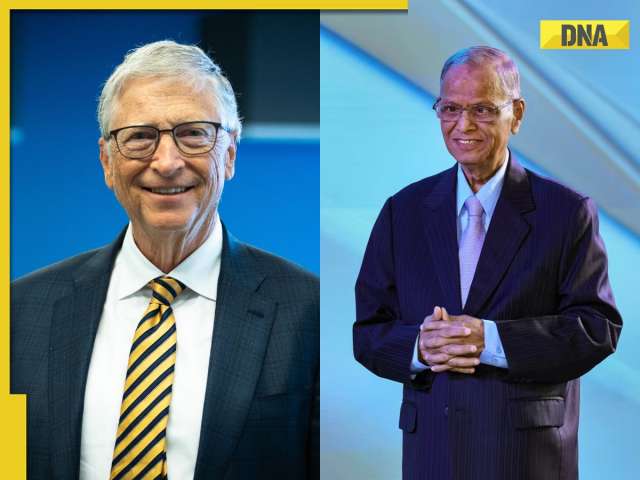

From Alia Bhatt to Anushka Sharma: 5 Bollywood moms who are redefining style Want to think like a billionaire? Try these 5 habits followed by Bill Gates, Narayana Murthy and others

Want to think like a billionaire? Try these 5 habits followed by Bill Gates, Narayana Murthy and others In Pics: Tara Sutaria brings fairytale magic to ramp in a shimmering golden gown at ICW 2025

In Pics: Tara Sutaria brings fairytale magic to ramp in a shimmering golden gown at ICW 2025 From Love in the Moonlight to Moon Embracing the Sun: 7 must-watch K-dramas

From Love in the Moonlight to Moon Embracing the Sun: 7 must-watch K-dramas Stampede at Mansa Devi temple in Haridwar, 6 feared dead, several injured

Stampede at Mansa Devi temple in Haridwar, 6 feared dead, several injured Japan’s fastest bullet train can cover Delhi to Varanasi in just 3.5 hours, check stoppages, route, will be operational from...

Japan’s fastest bullet train can cover Delhi to Varanasi in just 3.5 hours, check stoppages, route, will be operational from... PM Modi issues BIG statement on India-UK trade deal, says, 'It shows growing trust of...'

PM Modi issues BIG statement on India-UK trade deal, says, 'It shows growing trust of...' SHOCKING! 1-year-old child bites cobra to death in THIS state: 'He was spotted with...'

SHOCKING! 1-year-old child bites cobra to death in THIS state: 'He was spotted with...' India appeals to Thailand, Cambodia to prevent escalation of hostilities: 'Closely monitoring...'

India appeals to Thailand, Cambodia to prevent escalation of hostilities: 'Closely monitoring...' After IAS Jagrati Awasthi, marksheet of UPSC topper AIR 3 Donuru Ananya Reddy goes viral, she scored highest in...

After IAS Jagrati Awasthi, marksheet of UPSC topper AIR 3 Donuru Ananya Reddy goes viral, she scored highest in... Meet man, son of tea seller, who cracked UPSC exam thrice without any coaching to become IAS officer, his AIR was..., he is currently posted in...

Meet man, son of tea seller, who cracked UPSC exam thrice without any coaching to become IAS officer, his AIR was..., he is currently posted in... Indian Army Agniveer CEE 2025 result declared, here's how you can download it

Indian Army Agniveer CEE 2025 result declared, here's how you can download it Meet IPS officer, DU grad, who cracked UPSC exam in her third attempt, secured 992 out of 2025 marks with AIR..., now married to IAS...

Meet IPS officer, DU grad, who cracked UPSC exam in her third attempt, secured 992 out of 2025 marks with AIR..., now married to IAS... Meet woman, who studied MBBS, later cracked UPSC with AIR..., became popular IAS officer for these reasons, shares similarities with IAS Tina Dabi, she is from...

Meet woman, who studied MBBS, later cracked UPSC with AIR..., became popular IAS officer for these reasons, shares similarities with IAS Tina Dabi, she is from... Maruti Suzuki's e Vitara set to debut electric market at Rs..., with range of over 500 km, to launch on...

Maruti Suzuki's e Vitara set to debut electric market at Rs..., with range of over 500 km, to launch on... This is world’s most expensive wood, cost of 1kg wood is more than gold, its name is..., is found in...

This is world’s most expensive wood, cost of 1kg wood is more than gold, its name is..., is found in... This luxury car is first choice of Indians, even left BMW, Jaguar, Audi behind in sales, it is...

This luxury car is first choice of Indians, even left BMW, Jaguar, Audi behind in sales, it is... Kia India unveils Carens Clavis: Check features, design changes, price and more; bookings open on...

Kia India unveils Carens Clavis: Check features, design changes, price and more; bookings open on... Tesla CEO Elon Musk launches most affordable Cybertruck, but it costs Rs 830000 more than older version, it is worth Rs...

Tesla CEO Elon Musk launches most affordable Cybertruck, but it costs Rs 830000 more than older version, it is worth Rs...

)

)

)

)

)

)

)

)

)

)

)

)

)

)

)

)